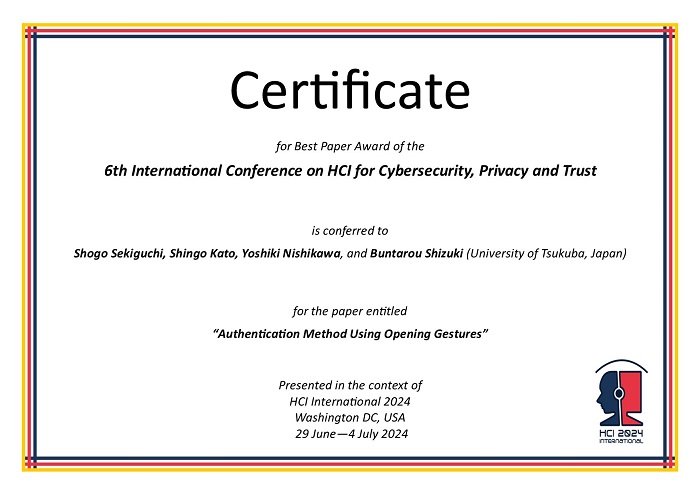

The Best Paper Award of the 6th International Conference on HCI for Cybersecurity, Privacy and Trust

has been conferred to

Shogo Sekiguchi, Shingo Kato, Yoshiki Nishikawa, and Buntarou Shizuki

(University of Tsukuba, Japan)

for the paper entitled

"Authentication Method Using Opening Gestures"

Shogo Sekiguchi

(presenter)

Best Paper Award for the 6th International Conference on HCI for Cybersecurity, Privacy and Trust, in the context of HCI International 2024, Washington DC, USA, 29 June - 4 July 2024

Certificate for the Best Paper Award of the 6th International Conference on HCI for Cybersecurity, Privacy and Trust, presented in the context of HCI International 2024, Washington DC, USA, 29 June - 4 July 2024

Paper Abstract

Smart locks can be used to improve door security. However, code- (e.g., PIN and passwords) and biometric-based (e.g., fingerprints and faces) authentication methods in smart locks can be spotted, limiting their usability in real life. In this study, we present an authentication method that uses a gesture to open a door (opening gesture). In this method, the user performs their own opening gesture to open a door, then authentication is performed based on the unique behavioral characteristics of their opening gesture. This design may have the following merits. First, the user can design their opening gesture in a way that reflects their preferences, making the gesture easily memorable. Second, it is difficult to imitate the movements since they inherently contain individuality. Finally, an opening gesture, unlike biometric features such as a face or fingerprints, can be changed if it is duplicated by an attacker. To examine the idea of our authentication method, we asked participants to design their own opening gestures and perform them for data collection. Capacitive sensors, pressure sensors, and an IMU were used to measure the movements of the gestures. The results showed that a Random Forest with 11 gestures could reach an average precision rate of 0.816 and an average FAR of 0.015. Our shoulder hacking experiment with 8 participants showed that our system achieved a FAR of 0.000 for the imitated gestures by nonusers. These results showed resistance to imitation by attackers.

The full paper is available through SpringerLink, provided that you have proper access rights.